This section describes our most commonly used methods of collecting data, the types of data that we collected, how we used it, and some important constraints to consider, particularly when interpreting our research questions. It also looks at how we arrive at our research questions to begin with, and what we do with our research findings.

You hear a lot about breaking down silos in the public sector. Often in government, research is done by a different team than the one leading experimentation, and both are separate from the development cycle of actual product delivery.

While breaking down silos between these areas of work is important, at Talent Cloud we went in a different direction, by putting each of the components of our development cycle in one place (see our Team Operations section). This helps us to work efficiently, keeps us on the same path, and promotes accountability across the whole product team.

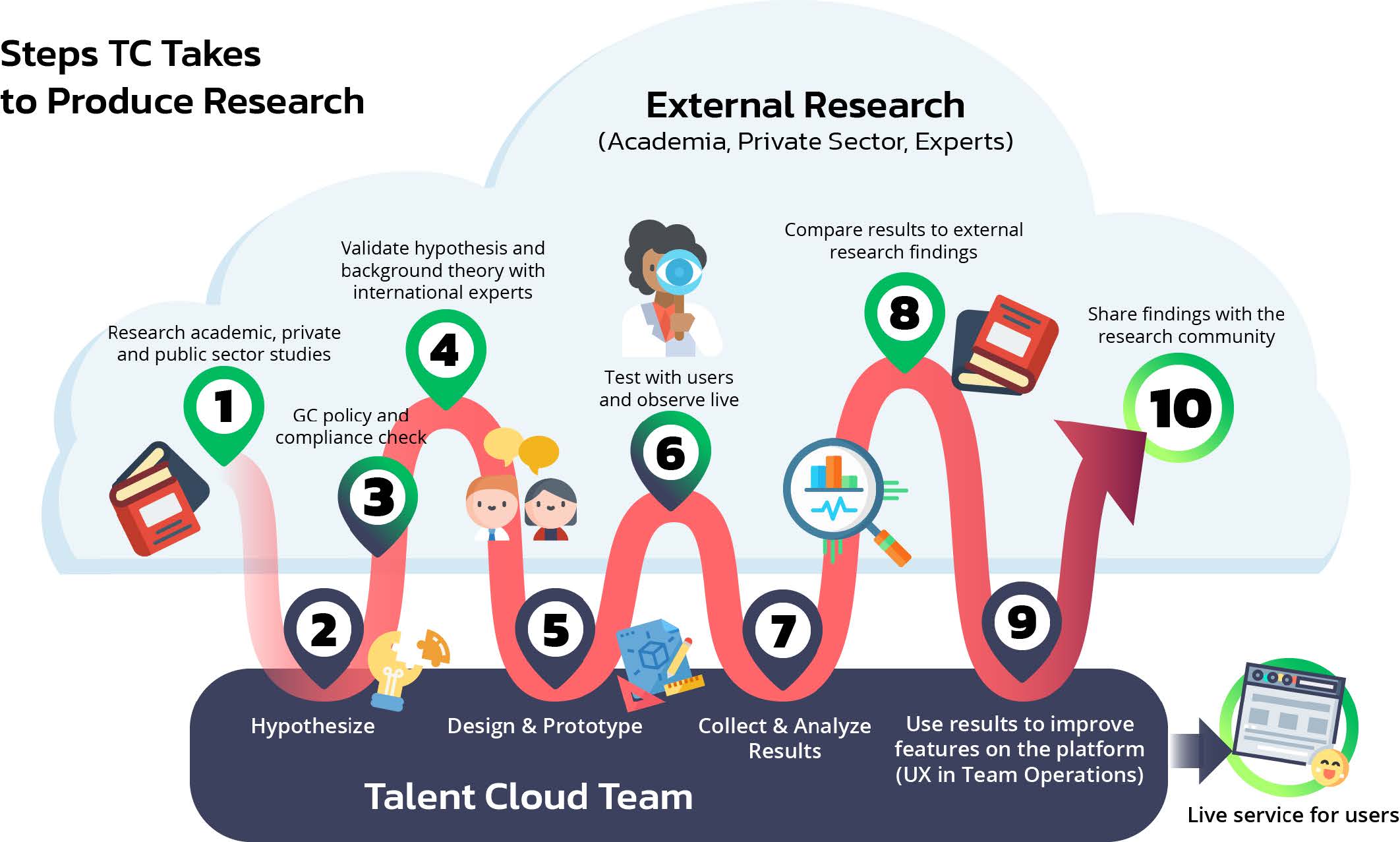

So how does the research cycle map into the team’s development cycle? Here’s how our research cycle works, beginning with looking up what’s out there beyond government and ending with contributing findings back to the broader community.

The platform and features that make up Talent Cloud can be broken down into a series of experiments. Each of those experiments began with external research. Sometimes there were widely adopted practices or standards that we could use, but sometimes the answers weren’t so easy to find.

With our initial desk research in hand, we moved on to running pilot projects with real people and job processes to test our hypotheses, all while running compliance checks against government policies. While we haven’t had the sample size to run randomized controlled trials, there are many, albeit less powerful, research methods available for this type of work. We also didn’t lean on a single approach, but instead adapted to identify the best tool for the job. Our methodologies draw from behavioural psychology, lean process re-engineering, human-centered design, and old-school qualitative and quantitative research methods.

Once we had our pilot results, we went back to external data to validate our findings. Comparing or benchmarking results is always important, but it’s especially so when you’re dealing with very small sample sizes, as we were with jobs posted to the site. It also helped us better understand the machinery and behaviours that shape staffing and talent recruitment.

Over the course of the project, we collected a huge amount of qualitative data. We conducted workshops with every user group before we started to design anything. Once we started to design, we tested wireframe prototypes early and often, observing whether people were using features the way we thought they would, and then talking to them about what they liked or didn’t. We also took the prototypes with us to presentations, whether we were talking to our partner departments or to the general public, and asked people to tell us what they thought.

We also didn’t stop collecting qualitative data on features once they were launched. Our Project Coordinator observed how the platform was being used, and flagged lots of issues that we were able to fix quickly, because we were watching. They also regularly compiled user emails in which patterns emerged and sent them to our user experience designers.

We also tried really hard to talk to everyone who used the platform, to get their thoughts. A lot of these conversations were semi-structured interviews. Fairly early on, we developed an interview guide to help focus the conversation on some of our key questions, but we left lots of room to digress and follow our participants' train of thought.

We also sent out surveys both to managers who posted jobs on the site and to the applicants for those jobs. While there were some quantitative questions on these forms, we got some of the richest information from the open text boxes.

GC HR authorities collect a significant amount of data on the hiring process, which helps the GC understand trends and priorities. That said, when we looked at our two primary research goals (time to staff and diversity/culture fit), a lot of the questions that we had related to issues where data was either not collected or not easily accessible to us (e.g. through the Open Government Portal).

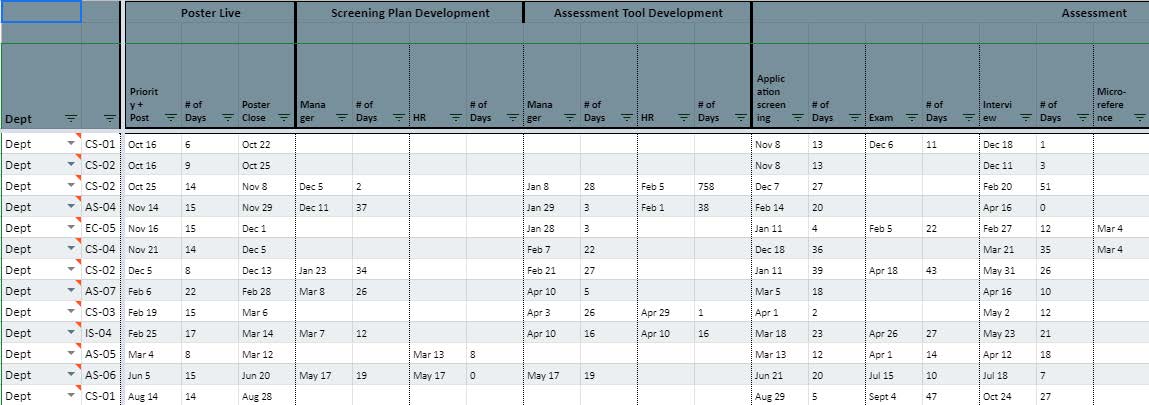

Data about real staffing processes that were run on Talent Cloud was an important line of evidence that we used to test our research questions. To do that we collected data on staffing that wasn’t readily available at this level of detail anywhere else in the GC. We kept track of how long every single step of the hiring process took, as well as the number of applicants that passed each phase of the assessment process. For example, we looked meticulously at things like how many times a job advertisement draft is passed back and forth between HR advisors and managers, how many applicants applied on the last day of a job advertisement, and how machinery steps like translation and data entry impact the order and timing of approvals.

All of this data was logged manually in a separate database by the system administrator. (Our plan had been that once the platform covered the staffing process end-to-end, this process would be automated using log files.) Having access to this detailed information helped us identify where processes bog down, and what required process improvements versus behavioural interventions.
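To make this kind of tracking concrete, here is a minimal Python sketch of how step durations and pass-through rates could be summarized from a manually kept log. It is not the database or tooling we actually used. The field names, step names, and every value in the sample rows are invented for illustration and are not Talent Cloud results.

```python
from collections import defaultdict
from datetime import date
from statistics import median

# Hypothetical manual log: one row per completed step of a job process.
# All identifiers, step names, and dates are made up for this sketch.
step_log = [
    {"job_id": "J-001", "step": "draft_advertisement",
     "start": date(2019, 4, 1), "end": date(2019, 4, 12)},
    {"job_id": "J-001", "step": "translation",
     "start": date(2019, 4, 12), "end": date(2019, 4, 17)},
    {"job_id": "J-002", "step": "draft_advertisement",
     "start": date(2019, 5, 6), "end": date(2019, 5, 27)},
    {"job_id": "J-002", "step": "translation",
     "start": date(2019, 5, 27), "end": date(2019, 5, 30)},
]


def median_days_per_step(rows):
    """Return the median duration in calendar days for each step name."""
    durations = defaultdict(list)
    for row in rows:
        durations[row["step"]].append((row["end"] - row["start"]).days)
    return {step: median(days) for step, days in durations.items()}


def pass_through_rates(phase_counts):
    """Given an ordered list of (phase, applicants_remaining) pairs, return
    the share of applicants from the previous phase who reached each phase."""
    rates = {}
    for (_, previous_n), (phase, n) in zip(phase_counts, phase_counts[1:]):
        rates[phase] = n / previous_n if previous_n else None
    return rates


# Hypothetical assessment funnel for a single job process.
funnel = [("applied", 40), ("screened_in", 20), ("interviewed", 5)]

step_medians = median_days_per_step(step_log)
funnel_rates = pass_through_rates(funnel)
print(step_medians)
print(funnel_rates)
```

With only a handful of jobs, the median is a reasonable summary because a single stalled process does not distort it the way it would distort a mean.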

Because of sample size constraints (53 jobs have been posted since the platform went live, across many departments, classifications, and levels), statistical analysis of the data is not yet possible. So, when we talk about our results on job processes, keep in mind that we’re talking about weak signals. We try to mitigate this by looking at multiple lines of evidence, and benchmarking our data whenever possible.

However, on the applicant side, we passed the 1,000 applicant mark, which is beginning to approach the volume that we need for statistically significant results. Here, too, our ability to test some of our research questions has been limited by the information we can collect. Without a Protected B server, we aren’t able to do things like collect Employment Equity data on the site, pushing our research on diversity into the qualitative data realm.
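To give a rough sense of what these sample sizes mean in practice, the Python sketch below compares the uncertainty around a proportion measured on 53 items with one measured on 1,000. It uses the Wilson score interval, a standard textbook method chosen here for illustration rather than one drawn from our analysis. The observed rate of roughly 50% is a hypothetical value, not a Talent Cloud finding.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return center - half_width, center + half_width


# Hypothetical outcome observed in about half of cases at each sample size.
low_jobs, high_jobs = wilson_interval(27, 53)       # job-sized sample
low_apps, high_apps = wilson_interval(500, 1000)    # applicant-sized sample

width_jobs = high_jobs - low_jobs
width_apps = high_apps - low_apps
print(f"n=53:   {low_jobs:.2f} to {high_jobs:.2f}")
print(f"n=1000: {low_apps:.2f} to {high_apps:.2f}")
```

The interval for 53 items is several times wider than the one for 1,000, and splitting those 53 jobs by department, classification, or level widens it further.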

We don’t want to oversell our findings. We’re a small-scale experiment and we don’t have a statistically sound sample size for running randomized controlled trials. While we’re cross-referencing external research with our quantitative and qualitative findings, our research results should be considered weak signals, not definitive solutions.

You can’t create evidence-based policy without a foundation of evidence. That was the goal of Talent Cloud.

When we present our results, we usually see a room of people nodding along. Whether they’re managers or HR Advisors, we find we’re confirming a lot of the things they’ve known for years but didn’t necessarily have numbers or stories to back up. Often we hear the comment, “We knew that already. So what’s the next step?”

While nodding from a crowd isn’t a valid quantitative data point for confirming results, we follow up with interviews, group discussions and live testing of new features, which helps us validate the hard-to-validate hunches - long recognized but statistically unproven - at play in the GC staffing space.

After all, often “hunches” are just multi-year pattern recognition coming forward from experts on the ground, like HR advisors and seasoned managers. And we take time to listen to these hunches and explore the behavioural psychology and systems design behind the issue, hopefully leading towards potential solutions.

The COVID Chilling Effect on Research

As in the rest of the world, COVID had a significant impact on Talent Cloud operations. When employees were sent home to telework indefinitely in mid-March, staffing all but ground to a halt. We had 10 job advertisements from April through June in 2019, but we didn’t post a single job during the equivalent time period in 2020. We also saw the job processes that were in the assessment phase slow significantly, and we saw a greater proportion of jobs cancelled by departments due to financial constraints. (One side note to this: the few new jobs that did launch during the summer 2020 period were able to take advantage of platform upgrades, and as a result, we saw time to staff continue to come down significantly with these processes.)

2020 was the year we planned to significantly scale up, and get the sample sizes that we needed to put some statistical power behind our results. Instead, we wound up pivoting quickly to try to help facilitate internal talent mobility across the GC as government organized to support people, businesses, and shifting internal needs (read more about GC Talent Reserve in Section 5 of this report).